Why do accurate 3D simulations depend on computing power?

Airbus Protect’s Sustainability Business Unit studies various industrial risk scenarios, including toxic dispersion, fire and explosion. To do this, we use accurate 3D simulations. Running these simulations efficiently, within an acceptable restitution time (the time needed to obtain results), depends on computing power.

Computing power is particularly important when computational fluid dynamics (CFD) is involved. CFD is a branch of fluid mechanics that uses numerical analysis and data structures to analyse and solve problems involving fluid flows. Computers perform the calculations required to simulate the flow of a fluid (liquid or gas) and its interaction with surfaces defined by boundary conditions.

Whether our team is analysing the impact of a toxic gas leak or a chemical fire, simulating these phenomena for a quantitative risk assessment may require CFD, either to achieve a high level of accuracy or to gain a deep understanding of the underlying physics.

Is it possible to improve the accuracy of simulations?

Yes. A common way to increase the accuracy and complexity of simulations is to increase model size. This involves fine-tuning the size of the ‘computational domain’, the region in which the solution of a CFD simulation is calculated.

Computational fluid dynamics typically involves ‘meshing’ the domain – describing the geometry and the fluid flow with a multitude of points, called nodes. The volume occupied by the fluid is divided into discrete cells, and the governing equations (e.g. Navier-Stokes equations) are then solved over the cells and nodes.

Increasing the size and complexity of the numerical models means raising the number of nodes and cells, as well as adding complexity to the governing equations. The computing power required grows with these parameters, often more than proportionally. As a result, the computing cost of accurate CFD simulations can quickly become prohibitive, with typical restitution times ranging from several hours to several days.
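To see why cost can outpace the growth in cell count, here is a rough back-of-the-envelope sketch. It assumes a uniform 3D mesh, a fixed simulated duration and an explicit solver whose time step is limited by a CFL (Courant) condition, so that halving the cell size also halves the allowable time step. The function name `relative_cost` is hypothetical, and the model ignores solver-specific overheads.

```python
def relative_cost(refinement: float, dims: int = 3, cfl_limited: bool = True) -> float:
    """Rough cost multiplier when every cell edge is divided by `refinement`.

    Assumes a uniform mesh and a fixed simulated duration. The number of cells
    grows as refinement**dims; if the time step is CFL-limited, the number of
    time steps also grows linearly with refinement.
    """
    cells_factor = refinement ** dims
    steps_factor = refinement if cfl_limited else 1.0
    return cells_factor * steps_factor


if __name__ == "__main__":
    for r in (1, 2, 4):
        print(f"cell size / {r}: ~{relative_cost(r):.0f}x the original cost")
```

Under these assumptions, halving the cell size multiplies the number of cells by 8 and the number of time steps by 2, so a run becomes roughly 16 times more expensive.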

So, how can we reduce restitution times and improve accuracy?

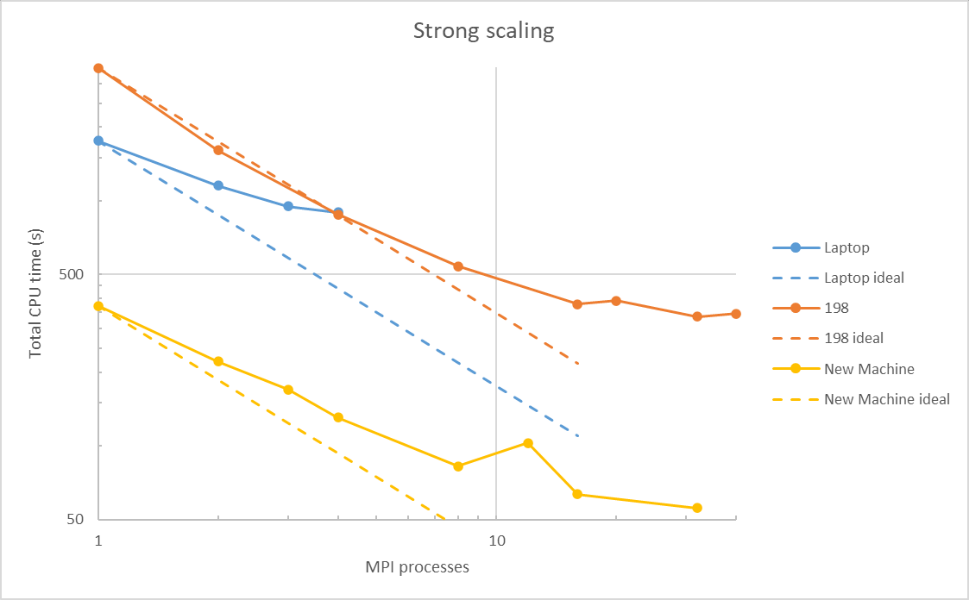

One of the most efficient ways to reduce the restitution time of simulations is to divide the computational domain into several sub-domains distributed over multiple processors or computing cores. In theory, dividing a simulation into four sub-domains and running it on four cores should make it four times faster. In practice, this ideal behaviour is never quite reached because of limitations such as load imbalance between cores and the time spent communicating between them.
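The gap between ideal and real speedup can be illustrated with a deliberately simplified model. The sketch below assumes a one-dimensional (slab) decomposition, a fixed cost per cell and a fixed cost per exchange with each neighbouring sub-domain. The function names and cost values are hypothetical and are not taken from any particular CFD code.

```python
def split_cells(n_cells: int, n_parts: int) -> list[int]:
    """Share n_cells as evenly as possible among n_parts slab sub-domains."""
    base, extra = divmod(n_cells, n_parts)
    return [base + (1 if i < extra else 0) for i in range(n_parts)]


def step_time(n_cells: int, n_cores: int, t_cell: float, t_halo: float) -> float:
    """Modelled wall time of one time step with a 1D (slab) decomposition.

    Each core updates its own cells (t_cell per cell) and exchanges boundary
    data with each neighbouring sub-domain (t_halo per neighbour). Cores work
    in parallel, so the slowest core sets the pace.
    """
    per_core = []
    for i, cells in enumerate(split_cells(n_cells, n_cores)):
        neighbours = int(i > 0) + int(i < n_cores - 1)
        per_core.append(cells * t_cell + neighbours * t_halo)
    return max(per_core)


if __name__ == "__main__":
    # Hypothetical costs, in arbitrary time units
    serial = step_time(1000, 1, t_cell=1.0, t_halo=5.0)
    for p in (2, 4, 8):
        parallel = step_time(1000, p, t_cell=1.0, t_halo=5.0)
        print(f"{p} cores: speedup {serial / parallel:.2f} (ideal {p})")
```

With these made-up costs, four cores give a speedup of about 3.85 rather than 4, because the interior sub-domains pay for two exchanges each. When the cells do not divide evenly (for example 10 cells over 4 cores), the most heavily loaded core also holds the others back.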

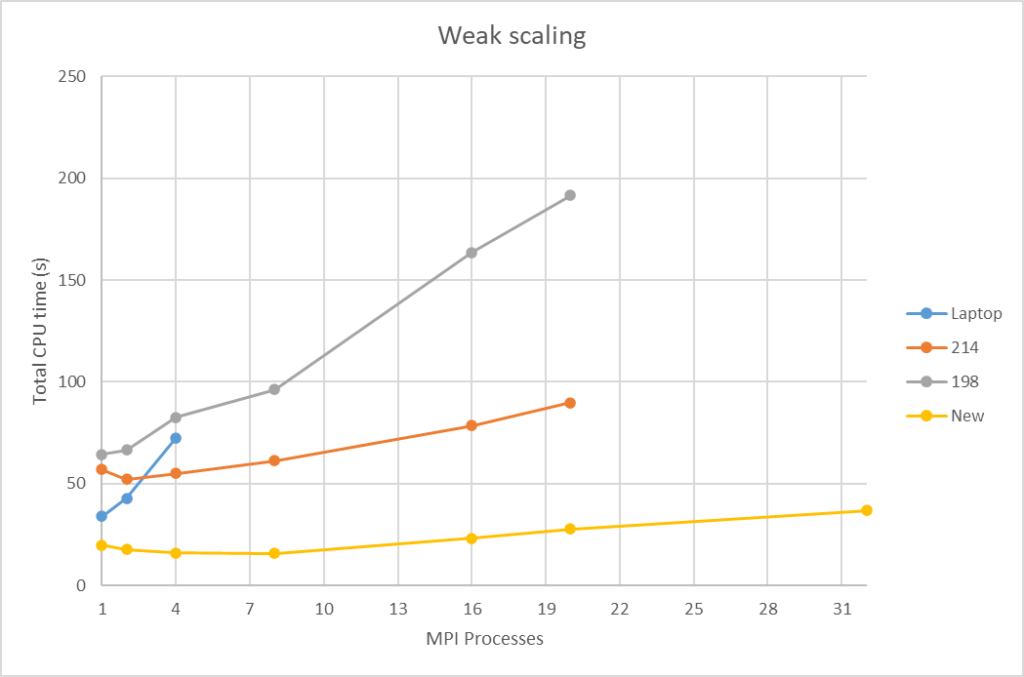

With this in mind, we perform scaling tests to assess how well a computer and a program perform as more computing cores are used. These tests typically fall into two groups: weak scaling tests and strong scaling tests. In a weak scaling test, the size of the problem is increased in proportion to the number of computing cores, so the workload per core stays constant; ideal behaviour is a computation duration that does not change. In a strong scaling test, the problem size is fixed and is divided among more and more cores; ideal behaviour is a computation duration divided exactly by the number of cores.
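Results of both tests are often summarised as a parallel efficiency, where 1.0 (100%) means ideal behaviour. The helpers below use the standard definitions, with T_1 the run time on one core and T_p the run time on p cores. The function names and the timings in the example are hypothetical, not measurements from the tests described here.

```python
def strong_scaling_efficiency(t_1: float, t_p: float, p: int) -> float:
    """Fixed total problem size: ideal is t_p == t_1 / p, giving 1.0."""
    return t_1 / (p * t_p)


def weak_scaling_efficiency(t_1: float, t_p: float) -> float:
    """Work per core held constant: ideal is t_p == t_1, giving 1.0."""
    return t_1 / t_p


if __name__ == "__main__":
    # Hypothetical wall-clock times in seconds, for illustration only
    strong = {1: 800.0, 2: 420.0, 4: 250.0}
    weak = {1: 800.0, 2: 860.0, 4: 1000.0}
    for p in strong:
        print(
            f"{p} cores: strong {strong_scaling_efficiency(strong[1], strong[p], p):.0%}, "
            f"weak {weak_scaling_efficiency(weak[1], weak[p]):.0%}"
        )
```

With the made-up timings above, both tests come out at 80% efficiency on four cores.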

You can see the ideal performance expected for both tests in the following diagram.

To deliver shorter restitution times and more accurate simulations, Airbus Protect’s Sustainability BU recently acquired a new scientific computer to support industrial risk studies. With 32 computing cores, 128 GB of RAM and a powerful AMD MI210 GPU with 64 GB of VRAM, this new architecture significantly increases the team’s computing resources.

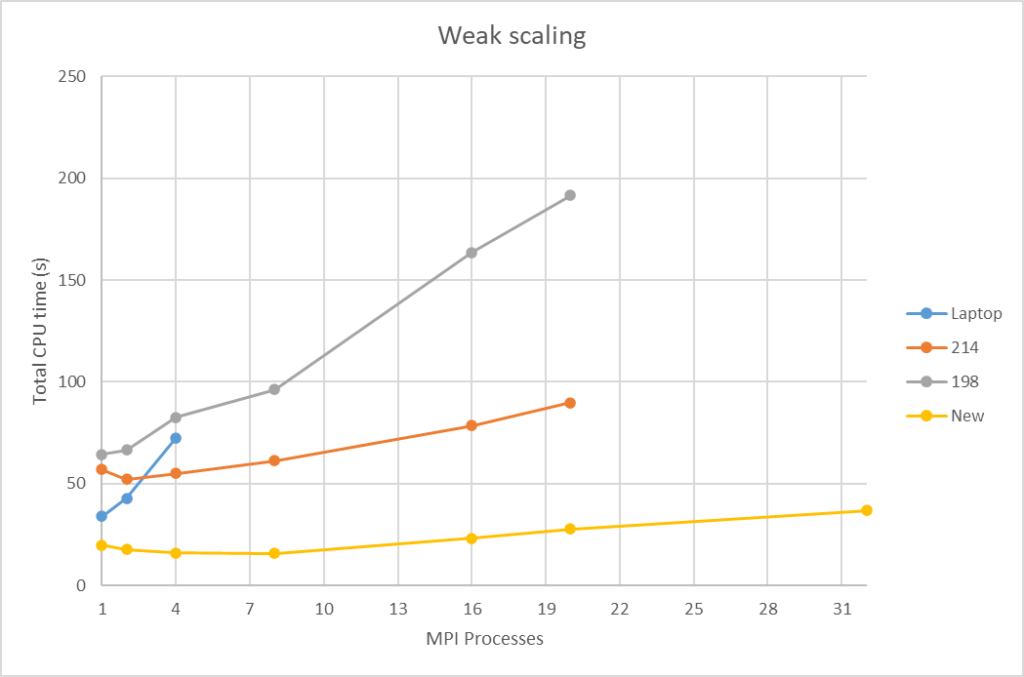

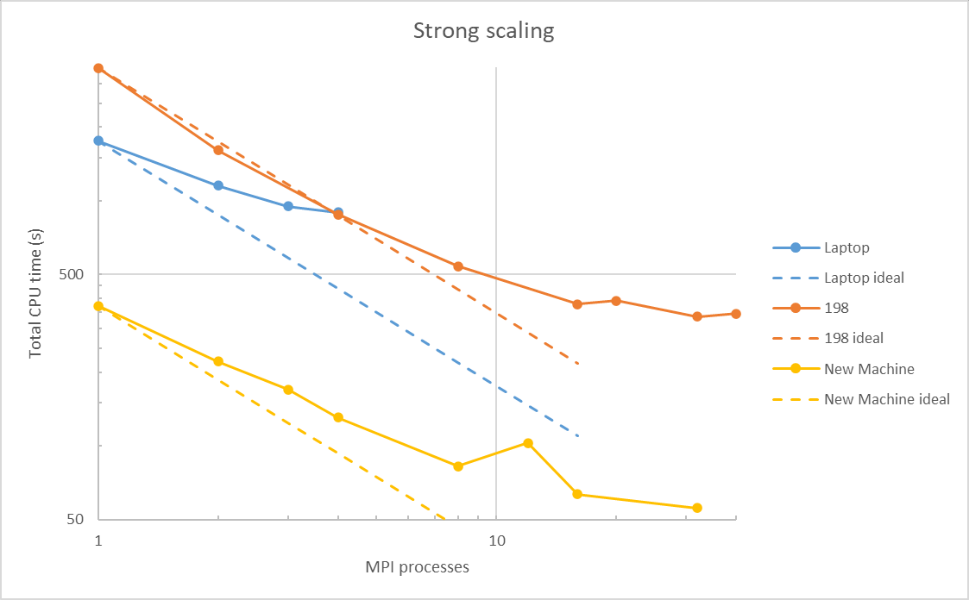

The diagrams below compare the previously available architectures (198 and 214) and latest-generation laptops with Airbus Protect’s new computer for both weak and strong scaling tests. To produce them, we used Fire Dynamics Simulator (FDS), developed by the US National Institute of Standards and Technology (NIST). FDS is a Large Eddy Simulation (LES) code for low-speed flows, with an emphasis on smoke and heat transport from fires.
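In FDS, parallel runs rely on the same domain-decomposition idea: the computational domain is split into several `&MESH` blocks, and in the usual MPI setup each mesh is handled by its own process. As an illustration only (not the input files used for these tests), the hypothetical helper below splits a box into equal slabs along x, keeping the cell size identical in every mesh so that cells align at mesh boundaries and each process gets the same number of cells. The domain dimensions are assumptions.

```python
def fds_mesh_lines(n_meshes: int, ijk: tuple[int, int, int],
                   xb: tuple[float, float, float, float, float, float]) -> list[str]:
    """Split one box into n_meshes equal slabs along x as FDS &MESH lines.

    ijk: total cells (I, J, K) for the whole box; I must divide evenly.
    xb:  box bounds (x0, x1, y0, y1, z0, z1) in metres.
    """
    i_total, j, k = ijk
    x0, x1, y0, y1, z0, z1 = xb
    if i_total % n_meshes != 0:
        raise ValueError("I must be divisible by the number of meshes")
    i_per_mesh = i_total // n_meshes
    dx = (x1 - x0) / n_meshes  # slab width; the cell size stays the same everywhere
    lines = []
    for m in range(n_meshes):
        xa, xb_end = x0 + m * dx, x0 + (m + 1) * dx
        lines.append(
            f"&MESH IJK={i_per_mesh},{j},{k}, "
            f"XB={xa:.2f},{xb_end:.2f},{y0:.2f},{y1:.2f},{z0:.2f},{z1:.2f} /"
        )
    return lines


if __name__ == "__main__":
    # Hypothetical 4 m x 2 m x 2 m box with 10 cm cells, split for 4 MPI processes
    for line in fds_mesh_lines(4, (40, 20, 20), (0.0, 4.0, 0.0, 2.0, 0.0, 2.0)):
        print(line)
```

Coordinates are written with two decimal places, which suits this example but would need more precision for finer meshes. A four-mesh input like this is typically launched with something like `mpiexec -n 4 fds job.fds`.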

As the diagrams show, Airbus Protect’s new architecture is the fastest on a single core: between two and nine times faster, to be precise! Even more interestingly, it also stays much closer to ideal performance as the number of cores increases, in both scaling tests.

Overall, this new scientific computer strongly reinforces our capacity to offer customers faster and more detailed simulations.

Did you find this blog interesting? Learn more about Airbus Protect’s industrial risk and modelling services.

Sustainability
