The EU AI Act has come into force! Here’s what you need to know.

After long negotiations and discussions between European institutions and bodies, the EU AI Act came into force on August 1, 2024. While Artificial Intelligence offers vast potential and commercial opportunities, its ethical implications and inherent risks mean it must be used responsibly and with care. The EU AI Act establishes clear, binding rules to ensure businesses apply this nascent technology appropriately and avoid complications in the long run.

Understanding the EU AI Act in 5 steps

1. The objective of the AI Act

The EU AI Act is the world’s first comprehensive regulation on Artificial Intelligence, designed to combat and mitigate potential ethical and safety risks according to their severity. The objective is to ensure AI systems are safe, trustworthy, and uphold fundamental rights.

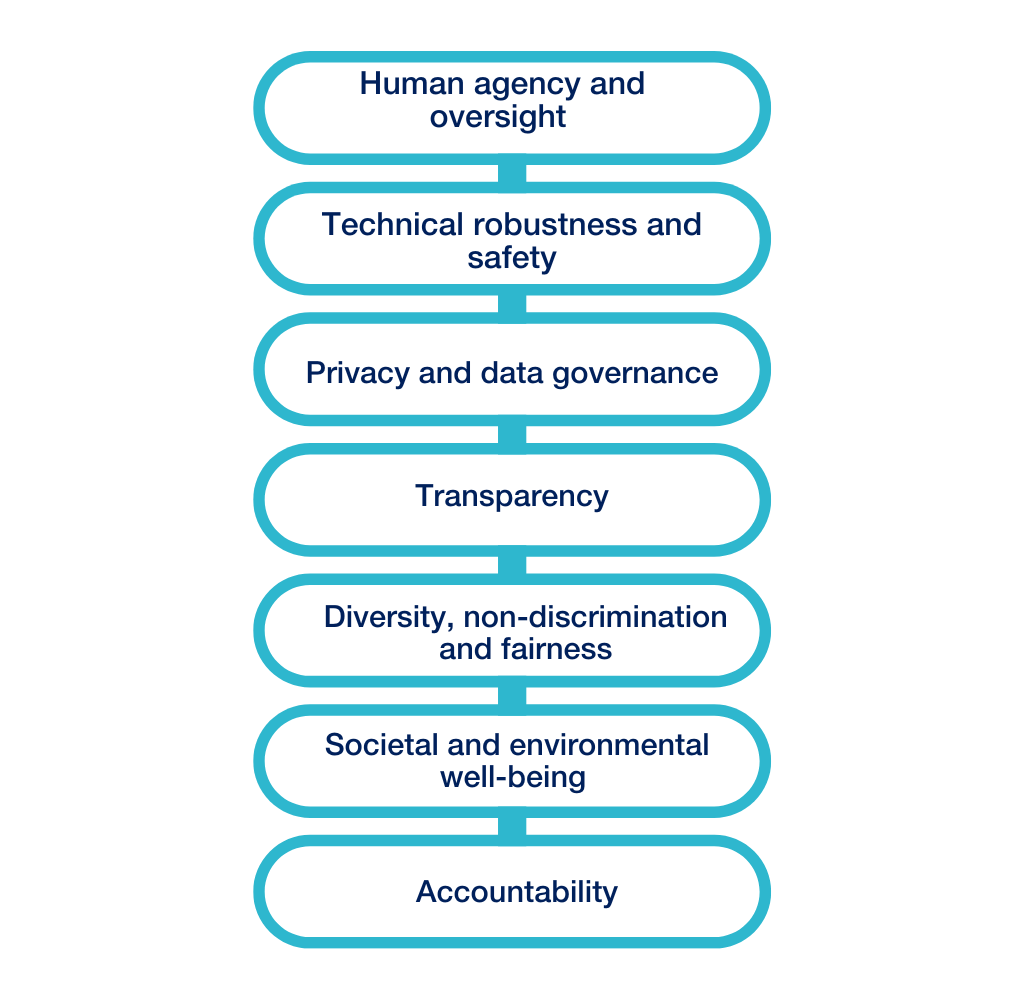

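The regulation builds upon seven foundational principles from the High-Level Expert Group on AI (HLEG) and translates them into concrete obligations, unifying the approach to AI governance across the EU. The key requirements are:

- Human agency and oversight

- Technical robustness and safety

- Privacy and data governance

- Transparency

- Diversity, non-discrimination and fairness

- Societal and environmental well-being

- Accountability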

Despite being an EU regulation, the EU AI Act has extraterritorial reach. It applies not only to AI systems developed and used within the European market, but also to non-EU providers and deployers whose AI systems are placed on the EU market or whose systems’ output is used in the EU. However, there are specific exemptions, such as research and development prior to deployment or placement on the market, exclusively military, defence or national security purposes, and purely personal, non-professional use.

But how do we define an “AI system”? The regulation gives us a specific but very broad definition composed of three cumulative criteria:

- Autonomy: a machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment.

- Generation of outputs: infers, from the input it receives, how to generate outputs such as predictions, content, recommendations or decisions, for explicit or implicit objectives.

- Influence: outputs can influence physical or virtual environments.
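Because the three criteria are cumulative, a system qualifies only if all of them hold at once. As a simplified illustration (a self-assessment checklist, not a legal test), that logic can be sketched in Python. The `SystemProfile` fields and the `meets_ai_system_definition` function are hypothetical names introduced here, not terms taken from the regulation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SystemProfile:
    """Hypothetical yes/no answers describing a system under review."""

    # Criterion 1: a machine-based system operating with some level of autonomy.
    machine_based_with_autonomy: bool
    # Criterion 2: infers from its input how to generate predictions,
    # content, recommendations or decisions.
    infers_outputs_from_input: bool
    # Criterion 3: those outputs can influence physical or virtual environments.
    outputs_can_influence_environment: bool
    # The definition says a system "may" adapt after deployment, so this
    # field is recorded but does not affect the result.
    adapts_after_deployment: bool = False


def meets_ai_system_definition(profile: SystemProfile) -> bool:
    """Return True only when all three cumulative criteria are met."""
    return (
        profile.machine_based_with_autonomy
        and profile.infers_outputs_from_input
        and profile.outputs_can_influence_environment
    )


if __name__ == "__main__":
    # A hypothetical learned recommender: all three criteria hold.
    recommender = SystemProfile(True, True, True)
    # A hypothetical fixed formula: it computes results but does not infer.
    fixed_formula = SystemProfile(
        machine_based_with_autonomy=True,
        infers_outputs_from_input=False,
        outputs_can_influence_environment=True,
    )
    print(meets_ai_system_definition(recommender))    # True
    print(meets_ai_system_definition(fixed_formula))  # False
```

Keeping adaptiveness as a recorded but non-decisive field mirrors the definition’s wording that a system “may” adapt after deployment.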

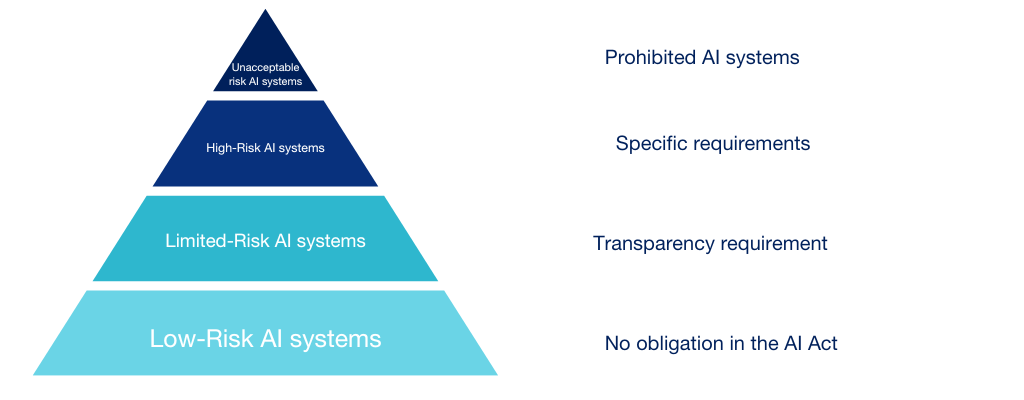

2. Categorisation based on risk severity

AI systems are ranked at different levels according to the potential risks they may present to health, safety and fundamental rights. The categories are as follows:

Unacceptable risk AI systems

Some AI systems are deemed to pose an unacceptable risk to people’s safety and fundamental rights. To prevent abuse and uphold human dignity and privacy, the regulation prohibits AI systems that:

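- Deploy subliminal, manipulative or deceptive techniques that materially distort people’s behaviour

- Exploit vulnerabilities related to age, disability or socio-economic circumstances

- Categorise individuals using biometric data to infer sensitive attributes, such as race, political opinions or sexual orientation

- Perform social scoring

- Assess the risk of a person committing a crime based solely on profiling or personality traits

- Build facial recognition databases through untargeted scraping of facial images from the internet or CCTV footage

- Infer emotions in workplaces and educational institutions, except for medical or safety reasons

- Identify individuals in real time through remote biometric identification in publicly accessible spaces for law enforcement purposes, subject to narrowly defined exceptions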

High-risk AI systems

High-risk AI systems are those that pose a significant risk of harm to the health, safety or fundamental rights of natural persons. The regulation classifies as high-risk those AI systems intended to be used as safety components of products (or that are themselves products) covered by specific EU legislation, such as civil aviation safety rules, where those products must undergo a third-party conformity assessment. Furthermore, the regulation lists specific domains in which AI systems are classified as high-risk, including:

- Biometrics, insofar as their use is permitted under EU or national law

- Critical infrastructures

- Education and professional training

- Recruitment and employee management

- Access to and enjoyment of essential private and public services and benefits

- Law enforcement

- Migration, asylum and border control management

- Administration of justice and democratic processes

Limited-risk and minimal-risk AI systems

Limited-risk AI systems must follow the principle of transparency. This means users must be informed when they are interacting with an AI system, for example a chatbot, and AI-generated or manipulated content such as deepfakes must be disclosed as such.

AI systems considered minimal risk are not directly regulated by the EU AI Act and can be developed and used as long as they comply with existing regulations. Examples of minimal-risk systems include AI-enabled video games and spam filters.
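Because the categories run from most to least severe, a first-pass triage of a use case can be sketched as an ordered lookup. This is a simplified sketch under stated assumptions: the tag names are hypothetical shorthand for the practices and domains listed above, and the real assessment involves conditions and exceptions that a tag match cannot capture (for example, a high-risk system can still owe transparency duties).

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical shorthand tags for the prohibited practices listed above.
PROHIBITED_PRACTICES = frozenset({
    "manipulation",
    "exploiting_vulnerabilities",
    "sensitive_biometric_categorisation",
    "social_scoring",
    "crime_prediction_by_profiling",
    "untargeted_face_scraping",
    "workplace_emotion_recognition",
    "realtime_remote_biometric_id",
})

# Hypothetical shorthand tags for the high-risk domains listed above.
HIGH_RISK_DOMAINS = frozenset({
    "biometrics",
    "critical_infrastructure",
    "education",
    "employment",
    "essential_services",
    "law_enforcement",
    "migration_border_control",
    "justice_democratic_processes",
})

# Hypothetical tags for uses that trigger transparency duties.
TRANSPARENCY_USES = frozenset({"chatbot", "deepfake"})


def classify(tags: set[str], is_product_safety_component: bool = False) -> RiskTier:
    """Return the most severe tier matched, checking tiers in order of severity."""
    if tags & PROHIBITED_PRACTICES:
        return RiskTier.UNACCEPTABLE
    if is_product_safety_component or tags & HIGH_RISK_DOMAINS:
        return RiskTier.HIGH
    if tags & TRANSPARENCY_USES:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


if __name__ == "__main__":
    print(classify({"social_scoring"}))         # RiskTier.UNACCEPTABLE
    print(classify({"employment", "chatbot"}))  # RiskTier.HIGH
    print(classify({"chatbot"}))                # RiskTier.LIMITED
    print(classify({"spam_filter"}))            # RiskTier.MINIMAL
```

Checking the prohibited tier first matters: a use case that matches both a prohibited practice and a high-risk domain has to be treated as prohibited, not merely as high-risk.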

3. Specific requirements for each risk category

Of all the risk categories, high-risk AI systems carry the most obligations. These include implementing and maintaining an iterative risk-management system, documented alongside a quality management system that keeps policies and procedures up to date. Data governance also plays a major role, and the AI Act is closely linked to data privacy law: for example, personal data must still be processed in compliance with the GDPR. To adhere to the new EU AI regulation, providers of high-risk systems must use high-quality datasets for training, validation and testing. In some cases, deployers must conduct a Fundamental Rights Impact Assessment (FRIA), which can be combined with the Data Protection Impact Assessment (DPIA) required under the GDPR, where applicable.

Before a high-risk AI system is placed on the market or put into service, technical documentation must be drawn up to demonstrate the system’s compliance to authorities, and it must be kept up to date. In particular, providers of high-risk AI systems must show adherence to requirements for record-keeping (automatic event logging), accuracy, robustness and cybersecurity. Transparency in the design and development phases of high-risk AI systems is essential to enable deployers to interpret a system’s output and use it appropriately. In addition, effective human oversight must be ensured while the system is in operation, in particular through appropriate human-machine interface tools.

On top of identifying the relevant risk level, an organisation must determine its precise role in order to identify the applicable requirements. Organisations can be:

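- Providers: develop an AI system (or have one developed) and place it on the EU market or put it into service, including for their own use, under their own name or trademark.

- Deployers: use an AI system under their own authority, for example by integrating it into their own setup and processes (purely personal, non-professional use excepted).

- Importers: located or established in the EU and place on the EU market an AI system bearing the name or trademark of an entity established outside the EU.

- Distributors: part of the supply chain (other than the provider or importer) and make an AI system available on the EU market.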

4. Specific treatment for General Purpose AI (GPAI)

GPAI models are trained on vast amounts of data and can competently perform a wide range of distinct tasks, which allows them to be integrated into many downstream systems and applications. Many of these models are developed with the broader goal of reaching a level of adaptiveness and reasoning comparable to human intelligence.

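Providers of GPAI models are subject to a distinct regime that borrows some requirements from the high-risk category, such as drawing up technical documentation and providing information to the downstream providers that integrate the model into their own AI systems. They must also put in place a policy to comply with EU copyright law and publish a sufficiently detailed summary of the content used for training.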

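Some GPAI models can present a systemic risk owing to their high-impact capabilities, their reach in the EU market, or actual or reasonably foreseeable negative effects on public health, safety, public security, fundamental rights or society as a whole. A model is presumed to have high-impact capabilities when the cumulative compute used for its training exceeds 10^25 floating-point operations. These models face heightened scrutiny: their providers must meet stricter obligations, such as model evaluations, adversarial testing and serious-incident reporting, under close monitoring by the relevant authorities.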

5. Governance, sanctions and deadlines

Institutional governance will rest on the AI Office, set up within the European Commission, which will supervise and enforce the regulation’s rules on GPAI. The European Artificial Intelligence Board, composed of representatives from each Member State, will coordinate national authorities and provide technical and regulatory expertise. Much like the European Data Protection Board (EDPB) in its own field, this board will also advise on the regulation, issuing recommendations and written opinions on relevant issues. At the national level, each Member State will designate competent authorities to supervise and support the compliance of organisations within its territory.

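In terms of sanctions, the AI Act provides a tiered penalty system based on the severity of non-compliance. Fines for breaching most obligations can reach 15 million euros or 3% of the company’s total worldwide annual turnover, rising to 35 million euros or 7% for prohibited practices, whichever amount is higher. A lower tier of 7.5 million euros or 1% applies to supplying incorrect or misleading information to authorities. In comparison, the GDPR’s turnover-based fines range from 2% to 4% of global turnover.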
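The “whichever is higher” rule means the turnover-based cap dominates for large companies, while the fixed amount dominates for smaller ones. The arithmetic can be sketched in Python as follows. The tier labels and the `max_fine_eur` function are hypothetical, the figures are statutory maximums rather than likely fines (authorities set actual amounts case by case), and the SME branch reflects the Act’s rule that, for SMEs and start-ups, the lower of the two amounts applies.

```python
# Hypothetical tier labels mapped to (fixed cap in EUR, % of worldwide annual turnover).
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),
    "incorrect_information": (7_500_000, 1),
}


def max_fine_eur(tier: str, worldwide_turnover_eur: int, is_sme: bool = False) -> int:
    """Return the maximum possible fine for a tier, in whole euros."""
    fixed_cap, percent = FINE_TIERS[tier]
    # Integer arithmetic avoids floating-point rounding on large turnovers.
    turnover_cap = worldwide_turnover_eur * percent // 100
    # Most undertakings: the higher amount. SMEs and start-ups: the lower amount.
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


if __name__ == "__main__":
    # EUR 1 billion turnover: 7% (70 million) exceeds the 35 million fixed cap.
    print(max_fine_eur("prohibited_practice", 1_000_000_000))  # 70000000
    # EUR 100 million turnover: 7% is only 7 million, so 35 million applies.
    print(max_fine_eur("prohibited_practice", 100_000_000))    # 35000000
    # Same turnover as an SME breaching another obligation: min(15M, 3M).
    print(max_fine_eur("other_obligation", 100_000_000, is_sme=True))  # 3000000
```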

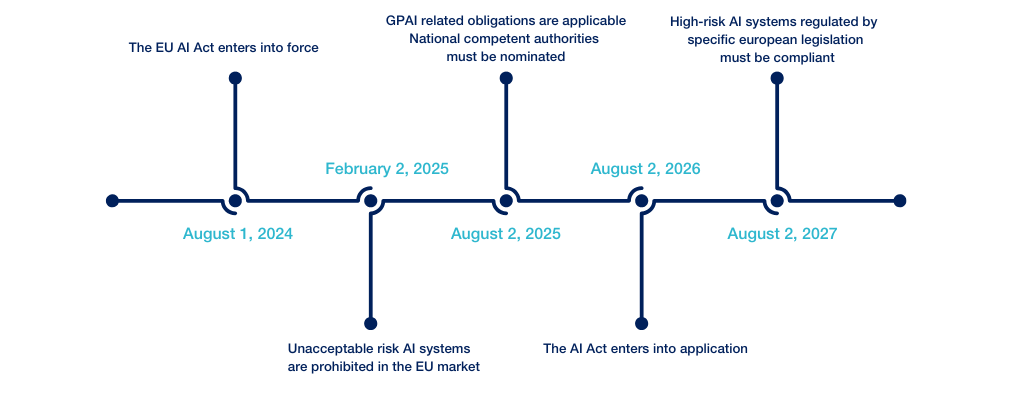

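What’s the timeline? The AI regulation entered into force on August 1, 2024, and applies gradually, starting with the riskiest use cases: the prohibitions on unacceptable-risk practices apply from February 2, 2025, the GPAI obligations from August 2, 2025, most remaining provisions from August 2, 2026, and the high-risk rules for AI embedded in regulated products from August 2, 2027.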

The countdown has begun. It’s time to get up to speed!
